Resource Management

The following are frequently asked questions about resource management.

Which virtualization platforms are supported?

The platform supports managing KVM, VMware, and other virtualization platforms, as well as public cloud platforms such as Alibaba Cloud and Tencent Cloud.

What kinds of baremetal servers are supported?

Currently, mainly x86 servers are supported, such as physical servers based on Intel and AMD platforms.

How do I set up nested virtualization for servers with VMware ESXi?

When you build a virtualization platform on OneCloud using VMware servers, note that VMware servers do not support nested virtualization by default. Enable nested virtualization for the servers on VMware using one of the following methods.

1. Modify the configuration file on the ESXi host so that all servers created on the host support nested virtualization.

- Connect to the VMware ESXi host over SSH and add the line vhv.enable = "TRUE" to the end of /etc/vmware/config.

- Restart the ESXi host. Be careful if vCenter is deployed on this ESXi host: rebooting the host shuts down all servers on it, including the vCenter server, so vCenter becomes inaccessible. After the reboot, start the vCenter server manually and wait a while until vCenter can be accessed normally again.

2. Enable nested virtualization for a single server by modifying the server's settings in vSphere.

- Shut down the server on which you want to enable nested virtualization.

- Select the server in the vSphere console to open its details page.

- Click the “Action” button to the right of the server name and select “Edit Settings” from the drop-down menu to open the Edit Settings dialog box.

- On the Virtual Hardware tab, expand the CPU item, select the “Expose hardware-assisted virtualization to guest OS” check box under Hardware Virtualization, and click “OK”.

3. Enable nested virtualization for a single server by replacing the server’s configuration file (xxx.vmx) in vSphere. This method is more involved, so prefer the previous method where possible.

- Shut down the server on which you want to enable nested virtualization.

- In vSphere, find and copy the path of the server's configuration file (Server Details > Edit Settings > Server Options > General Options).

- Locate the configuration file under Storage > Files and download it to your local machine.

- Add the line vhv.enable = "TRUE" to the end of the configuration file and save it.

- Upload the modified configuration file to the same directory, replacing the original file.

- Start the server. In vSphere, Server Details > Summary > Server Hardware > CPU should now show that hardware virtualization is enabled.
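The file edit used on the ESXi host (/etc/vmware/config) and on a downloaded .vmx file can also be scripted. The following is a minimal sketch, not a VMware or OneCloud tool: the hypothetical helper `set_vhv_enable` rewrites an existing `vhv.enable` line to "TRUE" or appends one, so running it twice does not create duplicates. It assumes only a POSIX shell with `grep`, `sed`, and `tail`. The second hypothetical helper, `count_virt_flags`, is meant to be run inside a Linux guest after it starts; a non-zero count suggests the vmx (Intel) or svm (AMD) CPU flag is visible to the guest.

```shell
#!/bin/sh
# Sketch only: helpers for enabling and checking nested virtualization.
# set_vhv_enable and count_virt_flags are hypothetical names.

# Ensure the file contains the setting vhv.enable = "TRUE" exactly once.
set_vhv_enable() {
  cfg="$1"
  tmp="${cfg}.tmp.$$"
  if grep -q '^[[:space:]]*vhv\.enable[[:space:]]*=' "$cfg"; then
    # Rewrite an existing entry (for example one set to "FALSE"),
    # writing through cat so the original file keeps its permissions.
    sed 's/^[[:space:]]*vhv\.enable[[:space:]]*=.*/vhv.enable = "TRUE"/' "$cfg" > "$tmp" &&
      cat "$tmp" > "$cfg"
    rm -f "$tmp"
  else
    # Make sure the file ends with a newline before appending.
    if [ -s "$cfg" ] && [ -n "$(tail -c 1 "$cfg")" ]; then
      printf '\n' >> "$cfg"
    fi
    printf 'vhv.enable = "TRUE"\n' >> "$cfg"
  fi
}

# Inside a Linux guest: count logical CPUs whose flags include vmx or svm.
# Only lines starting with "flags" are considered (not "vmx flags").
count_virt_flags() {
  grep '^flags' "${1:-/proc/cpuinfo}" | grep -c -w -E 'vmx|svm' || true
}

# Example usage:
#   set_vhv_enable /etc/vmware/config   # on the ESXi host, then reboot it
#   set_vhv_enable ./myserver.vmx       # on a downloaded .vmx file
#   count_virt_flags                    # inside the guest; expect > 0
```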

How do I turn on hardware virtualization support in the BIOS?

Hardware virtualization support is usually enabled in the BIOS by default. If it has been disabled, enable the Intel Virtualization Technology option (or Secure Virtual Machine on AMD platforms) in the BIOS, then save and exit.
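On a Linux host, one way to tell whether virtualization has been disabled in the BIOS is to look in the kernel log for a "disabled by bios" message, which the KVM modules log when they cannot use VT-x or AMD-V. The following is a minimal sketch, assuming the log is readable with `dmesg`; `virt_disabled_by_bios` is a hypothetical helper, and because the exact wording differs between kernel versions, the pattern is deliberately loose.

```shell
#!/bin/sh
# Sketch only: detect whether the kernel reports virtualization as disabled
# in the BIOS. virt_disabled_by_bios is a hypothetical helper that reads
# kernel log text on stdin (for example "kvm: disabled by bios" on older
# kernels); other kernel versions may phrase the message differently.
virt_disabled_by_bios() {
  grep -q -i -E 'disabled by bios|\(by bios\)'
}

# Example usage on the host (as root):
#   if dmesg | virt_disabled_by_bios; then
#     echo "Enable Intel VT-x or AMD SVM in the BIOS, then reboot"
#   fi
#   ls -l /dev/kvm   # exists once kvm_intel or kvm_amd has loaded successfully
```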

How do I specify the boot kernel of a host?


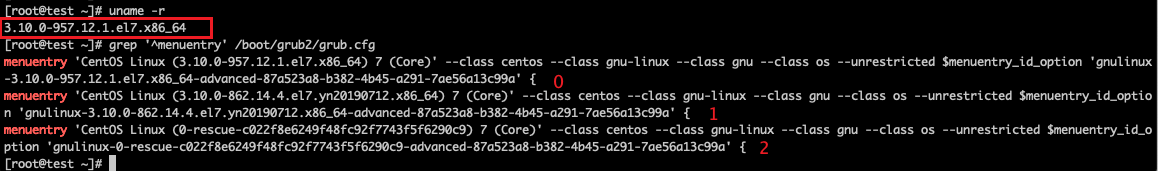

First, check the host’s current boot kernel with the following command.

$ uname -r

View all available kernels on the host with the following command.

$ grep '^menuentry' /boot/grub2/grub.cfg


As shown above, the current boot kernel is “3.10.0-957.12.1.el7.x86_64”. Menu entries are numbered in order starting from 0, so “3.10.0-862.14.4.el7.yn20190712.x86_64” is entry 1. To boot that kernel, edit /etc/default/grub and add GRUB_DEFAULT=1, or change the existing GRUB_DEFAULT value to 1.

$ vi /etc/default/grub
# Press i to enter insert mode and change the following line:
GRUB_DEFAULT=1
# When done, press Esc, then type :wq and press Enter to save the file

Execute the following command to regenerate the boot menu.

$ grub2-mkconfig -o /boot/grub2/grub.cfg

Restart the host and check that the boot kernel is now “3.10.0-862.14.4.el7.yn20190712.x86_64”.

$ uname -r
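The kernel selection above can also be done without opening an editor. The following is a minimal sketch, assuming a CentOS 7 style GRUB 2 layout in which menu entry titles are single-quoted in /boot/grub2/grub.cfg; `list_grub_entries` and `set_grub_default` are hypothetical helper names. The first prints each top-level menu entry with the index that GRUB_DEFAULT expects; the second sets or adds GRUB_DEFAULT in /etc/default/grub (or in another file passed as the second argument).

```shell
#!/bin/sh
# Sketch: pick a boot kernel by index non-interactively.
# Assumes CentOS 7 style GRUB 2 files; helper names are hypothetical.

# Print "index<TAB>title" for each top-level menu entry, counting from 0.
list_grub_entries() {
  grep '^menuentry' "${1:-/boot/grub2/grub.cfg}" |
    cut -d "'" -f 2 |
    awk '{ printf "%d\t%s\n", NR - 1, $0 }'
}

# Set GRUB_DEFAULT=<index> in the defaults file, adding the line if missing.
set_grub_default() {
  index="$1"
  file="${2:-/etc/default/grub}"
  tmp="${file}.tmp.$$"
  if grep -q '^GRUB_DEFAULT=' "$file"; then
    sed "s/^GRUB_DEFAULT=.*/GRUB_DEFAULT=${index}/" "$file" > "$tmp" &&
      cat "$tmp" > "$file"
    rm -f "$tmp"
  else
    printf 'GRUB_DEFAULT=%s\n' "$index" >> "$file"
  fi
}

# Example (as root):
#   list_grub_entries
#   set_grub_default 1
#   grub2-mkconfig -o /boot/grub2/grub.cfg
#   reboot
```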

How do I add a new local disk on the host to the storage pool used for servers' virtual disks?

1. Partition and format the disk. It is recommended to partition it with parted and format it as ext4.

2. After formatting, mount the disk in any directory under “/opt/cloud/” and add an entry to /etc/fstab so that the mount persists across reboots. It is recommended to identify the disk by its UUID in fstab; use the blkid command to get the disk’s UUID.

3. Edit /etc/yunion/host.conf on the host, find the local_image_path configuration item, and add the new disk’s mount directory to the array. After saving, restart the host service:

# Find the pod running the host service, then delete it so that it is recreated
$ kubectl get pods -n onecloud | grep host
$ kubectl delete pod <host-pod-name> -n onecloud

4. If the disk is not mounted under “/opt/cloud/” and you do not want to change its mount directory, follow the steps below instead.

# Assuming the existing mount directory is "/data/test", bind-mount it to "/opt/cloud/test"
$ mkdir -p /opt/cloud/test
$ mount --bind /data/test /opt/cloud/test

Then add the following entry to /etc/fstab so that the bind mount persists across reboots.

/data/test /opt/cloud/test none defaults,bind 0 0

Finally, edit /etc/yunion/host.conf on the host, find the local_image_path configuration item, and add the bind-mount directory (for example, /opt/cloud/test) to the array. Save the file and restart the host service:

# Find the pod running the host service, then delete it so that it is recreated
$ kubectl get pods -n onecloud | grep host
$ kubectl delete pod <host-pod-name> -n onecloud
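The partitioning, formatting, and /etc/fstab steps above can be sketched as shell functions. This is a minimal sketch under stated assumptions, not a OneCloud tool: `prepare_disk` erases the target disk, so setting `DRY_RUN=1` prints the commands instead of running them; /dev/sdb and /opt/cloud/disk2 are placeholder names; and `run`, `first_partition`, `prepare_disk`, and `add_fstab_entry` are hypothetical helpers. Editing local_image_path in /etc/yunion/host.conf is left as a manual step here, since this sketch makes no assumption about that file's exact layout.

```shell
#!/bin/sh
# Sketch only: partition, format, and persistently mount a new data disk.
# WARNING: prepare_disk erases the target disk. Device names and paths in
# the example below are placeholders; helper names are hypothetical.

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Print the first partition's device name
# (/dev/sdb -> /dev/sdb1, /dev/nvme0n1 -> /dev/nvme0n1p1).
first_partition() {
  case "$1" in
    *[0-9]) echo "${1}p1" ;;
    *) echo "${1}1" ;;
  esac
}

# Create one GPT partition spanning the whole disk and format it as ext4.
prepare_disk() {
  disk="$1"
  run parted -s "$disk" mklabel gpt mkpart primary ext4 0% 100%
  run udevadm settle   # wait for the new partition's device node
  run mkfs.ext4 "$(first_partition "$disk")"
}

# Append an fstab line unless a non-comment entry already uses its mount point.
add_fstab_entry() {
  line="$1"
  fstab="${2:-/etc/fstab}"
  mnt=$(echo "$line" | awk '{ print $2 }')
  if ! awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    echo "$line" >> "$fstab"
  fi
}

# Example (as root; /dev/sdb and /opt/cloud/disk2 are placeholders):
#   prepare_disk /dev/sdb
#   mkdir -p /opt/cloud/disk2
#   uuid=$(blkid -s UUID -o value /dev/sdb1)
#   add_fstab_entry "UUID=$uuid /opt/cloud/disk2 ext4 defaults 0 2"
#   mount -a
# For the bind-mount case, the same helper can persist the bind entry:
#   add_fstab_entry "/data/test /opt/cloud/test none defaults,bind 0 0"
```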

Note

If the disk is mounted in a directory outside “/opt/cloud” and that directory has been added to local_image_path in /etc/yunion/host.conf, the storage capacity shown on the OneCloud platform may not match the real storage capacity. In this case, follow step 4 to bind-mount the previous mount directory under “/opt/cloud”, take the host and its local storage offline, and delete the new storage on the OneCloud platform to restore normal operation.

How do I clean up the records of a server on a host that has gone offline?

When you delete a server on the cloud management platform, the controller asks the host service on the server's host to clean up the server's configuration and disk files. The controller deletes the server's database records only after the host reports that this cleanup succeeded.

If the host goes offline abnormally (due to a failure or another reason), the controller gets no response from the host service when it requests this cleanup, so deleting servers on that host fails. To delete servers on an offline host, the platform's climc command-line tool provides the server-purge command, which cleans up a server's database records while the host is unavailable.

The server-purge command is used as follows.

Connect to the control node over SSH and run the following commands.

# Disable the host so that it is treated as down

$ climc host-disable <host_id>

# Remove server records from the host

$ climc server-purge <server_id>
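When several servers on the same offline host need to be purged, the commands above can be wrapped in a loop. The following is a minimal sketch; `purge_host_servers` is a hypothetical helper that only calls `climc host-disable` and `climc server-purge`, and the server IDs must be supplied by you (for example, copied from the console), since this sketch does not look them up.

```shell
#!/bin/sh
# Sketch: disable an offline host, then purge the records of the given servers.
# purge_host_servers is a hypothetical helper.
# Usage: purge_host_servers <host_id> <server_id> [<server_id>...]
purge_host_servers() {
  host_id="$1"
  shift
  climc host-disable "$host_id" || return 1
  for server_id in "$@"; do
    # Keep going if one purge fails, but report it on stderr
    climc server-purge "$server_id" || echo "failed to purge $server_id" >&2
  done
}
```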

How do I quickly clean up the database records of a host that has gone offline?

Removing a host's database records requires deleting all servers and local disks on that host. When the host is offline, the cloud management platform cannot delete these servers and local disks through the console; they can only be purged one by one on the control node with the server-purge and disk-purge commands, which is tedious. To quickly clean up the database records of an offline host, the control node provides the cleanup script clean_host.sh.

Use it as follows.

Connect to the control node over SSH and run the following command.

$ /opt/yunion/scripts/tools/clean_host.sh <host_id>

Why is a physical (baremetal) server not being managed by the cloud management platform?

Check that the physical server meets the following requirements.

- The physical server boots in legacy BIOS mode, not UEFI.

- The server supports network boot, with PXE first in the boot order. On some servers, such as Dell servers, PXE boot may also need to be enabled in the NIC configuration.

- If the physical server to be managed is in the same network segment as the baremetal server, make sure the switch ports on both sides are configured identically, for example both set to access mode with the same PVID. You also need to start the host service and enable its DHCP relay function, so that the host service can receive DHCP broadcast requests and relay them to the baremetal service.

- If the server to be managed is not in the same network segment as the baremetal server, make sure DHCP relay is enabled on the gateway switch or on a host service in the same network segment.

- Check the IPMI settings. If you use a dedicated management port, make sure the management network can communicate with the baremetal server and that PXE requests can reach the baremetal server.

- HP servers do not have IPMI over LAN enabled by default, so you need to enable it manually: log in to the iLO web management interface, select Administration > Access Settings, and check Enable IPMI/DCMI over LAN on Port 623.

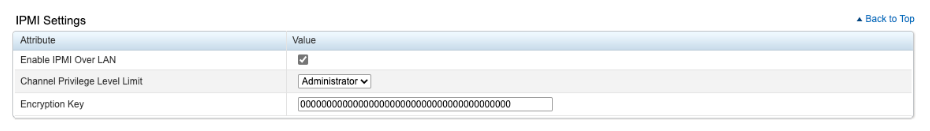

- To enable IPMI over LAN on Dell EMC servers, log in to the iDRAC web management interface, select iDRAC Settings > Network Settings > IPMI Settings, and check IPMI over LAN (Enabled).

- A physical IP subnet with enough available IP addresses must be configured on the cloud management platform. Each PXE-booted server needs 3 IP addresses to be managed and then deployed.
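Two of the checks above can be partly scripted. The following is a minimal sketch, assuming a Linux system with `ipmitool` installed: the hypothetical helper `boot_mode` reports whether a Linux system already running on the server was booted via UEFI (the kernel exposes /sys/firmware/efi only on UEFI boots), which helps confirm legacy BIOS mode; the hypothetical helper `check_ipmi` runs an IPMI-over-LAN query against the BMC, which should succeed once IPMI over LAN is enabled in iLO or iDRAC. The BMC address and credentials shown are placeholders.

```shell
#!/bin/sh
# Sketch: pre-checks before onboarding a physical server.
# Helper names are hypothetical; the optional sysfs root is only for testing.

# Print "uefi" if the running Linux system booted via UEFI, else "legacy".
boot_mode() {
  if [ -d "${1:-/sys}/firmware/efi" ]; then echo uefi; else echo legacy; fi
}

# Query chassis status over IPMI-over-LAN (lanplus interface).
# The password is passed via IPMI_PASSWORD (-E) so it does not appear in ps.
check_ipmi() {
  IPMI_PASSWORD="$3" ipmitool -I lanplus -H "$1" -U "$2" -E chassis status
}

# Example (placeholders):
#   boot_mode                              # expect "legacy"
#   check_ipmi 10.0.0.10 admin 'secret'    # should print the chassis status
```

Running `check_ipmi` from the baremetal server's network also gives a rough check that the management network can reach the BMC.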