Upgrade from 3.4 to 3.6

Upgrade introduction

This document describes how to upgrade from v3.4.x to v3.6.x, and from v3.6.x to v3.6.y (where y is greater than x).

Cross-version upgrades should proceed through adjacent versions. For example, upgrading from 3.3.x to 3.6.x requires the following steps:

- Upgrade from 3.3.x to 3.4.x.

- Upgrade from 3.4.x to 3.6.x.

Note

- Version 3.5 was not released to the public, so the version that follows 3.4 is 3.6.

- The upgrade process upgrades both the control nodes and the compute nodes, so perform the upgrade when the platform is idle.
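The adjacent-version rule above can be sketched as a small helper that prints each hop between two release series. This is only an illustration: `RELEASES` and `upgrade_path` are hypothetical names, and the list contains only the series named in this document.

```shell
# Hypothetical helper, not part of the product.
# RELEASES lists only the series mentioned in this document (an assumption);
# 3.5 is absent because it was not released to the public.
RELEASES="3.3 3.4 3.6"

# upgrade_path FROM TO: print one "A -> B" line per adjacent hop.
# The body runs in a subshell so its variables do not leak to the caller.
upgrade_path() (
    from="$1"
    to="$2"
    prev=""
    started=0
    for r in $RELEASES; do
        if [ "$started" -eq 1 ]; then
            echo "$prev -> $r"
        fi
        if [ "$r" = "$from" ]; then
            started=1
        fi
        prev="$r"
        if [ "$r" = "$to" ]; then
            break
        fi
    done
)
```

For example, `upgrade_path 3.3 3.6` lists the two hops described above, each of which is a separate upgrade run.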

Upgrade from 3.4.x to 3.6.x

Cross-version upgrades are currently supported only from the command line. This method has no network requirement: the upgrade is performed by mounting the installation package and executing the upgrade command.

Command line upgrade steps

-

The administrator logs in to the First Node remotely as the root user via ssh tool.

-

Switch the user to a non-root user. The root user is not allowed to perform the upgrade operation.

# The installation process will add the yunion user $ su yunion -

Upload the higher version DVD installer to the First Node, mount it and execute the upgrade command.

# Mount the high version DVD installer to the mnt directory $ sudo mount -o loop Yunion-x86_64-DVD-3.x-x.iso /mnt # Switch to the /mnt/yunion directory $ cd /mnt/yunion $ ./upgrade.sh -

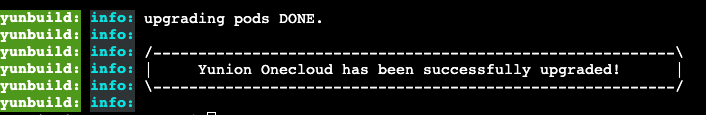

The interface is shown below after successful upgrade.

-

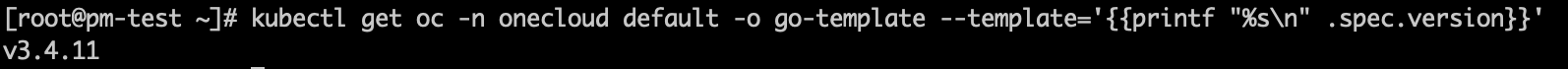
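The command line steps above could be wrapped in a script that refuses to run as root and checks that the installer exists before mounting it. This is a minimal sketch, not the product's own tooling: `require_non_root` and `run_upgrade` are hypothetical names, and the ISO path is supplied by the caller.

```shell
# Hypothetical wrapper around the documented steps.

# require_non_root UID: fail when the given numeric user id is 0 (root).
require_non_root() {
    if [ "$1" -eq 0 ]; then
        echo "run the upgrade as a non-root user such as yunion" >&2
        return 1
    fi
    return 0
}

# run_upgrade ISO: mount the installer on /mnt and run upgrade.sh from it.
run_upgrade() {
    require_non_root "$(id -u)" || return 1
    if [ ! -f "$1" ]; then
        echo "installer not found: $1" >&2
        return 1
    fi
    sudo mount -o loop "$1" /mnt || return 1
    # Subshell so the caller's working directory is left unchanged.
    ( cd /mnt/yunion && ./upgrade.sh )
}
```

It would be invoked as `run_upgrade Yunion-x86_64-DVD-3.x-x.iso`, with the file name replaced by the installer actually uploaded.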

Users can open the About page of the OneCloud web console to check the version information, or execute the following command to confirm that the product version is as expected.

$ kubectl get oc -n onecloud default -o go-template --template='{{printf "%s\n" .spec.version}}'
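To turn the version check into a pass/fail test, the reported version can be compared against the expected release series. The sketch below assumes the command prints a string such as `v3.6.x` or `3.6.x`; that format is an assumption, and `in_series` is a hypothetical helper name.

```shell
# in_series VERSION SERIES: succeed when VERSION (with an optional leading
# "v") equals SERIES or starts with "SERIES.".
in_series() {
    case "${1#v}" in
        "$2"|"$2".*) return 0 ;;
        *) return 1 ;;
    esac
}

# Possible usage with the command shown above:
# actual=$(kubectl get oc -n onecloud default -o go-template \
#     --template='{{printf "%s\n" .spec.version}}')
# in_series "$actual" 3.6 && echo "version is in the 3.6 series: $actual"
```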

Upgrading from 3.6.x to 3.6.y

Upgrading from 3.6.x to 3.6.y is currently only supported via the command line.

After upgrading from version 3.0 or 3.1 to 3.2, the console cannot access the front-end. How can this be solved?

Cause of the problem: Because of UI migration work in versions before 3.2, the front-end access URL contained a V1 or V2 version field. In version 3.2 the UI has been fully migrated to V2 and the version field has been removed from the front-end access URL. Environments upgraded from 3.0 or 3.1 to 3.2 still access the front-end with the version field by default, so users cannot reach the web front-end.

Solution: On the First Node, delete the configmap of the web service and restart the web service.

# Delete the default-web configmap

$ kubectl delete configmap -n onecloud default-web

# Delete the default-web pod (replace xxx with the actual pod name suffix)

$ kubectl delete pods -n onecloud default-web-xxx
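Because the `xxx` suffix differs in every environment, the pod names can be looked up instead of typed by hand. The sketch below filters the output of `kubectl get pods -o name` by component prefix. `pods_of` is a hypothetical helper, and it assumes pod names follow the `default-web-xxx` pattern used above.

```shell
# pods_of COMPONENT: read `kubectl get pods -o name` output on stdin and keep
# only the lines of the form pod/COMPONENT-<suffix>.
# `|| true` keeps the exit status at 0 when nothing matches.
pods_of() {
    grep "^pod/$1-" || true
}

# Possible usage; review the list before deleting anything:
# kubectl get pods -n onecloud -o name | pods_of default-web
# kubectl get pods -n onecloud -o name | pods_of default-web \
#     | xargs -r kubectl delete -n onecloud    # -r is a GNU xargs option
```

Matching on the trailing hyphen keeps a component with a longer name that merely starts with `default-web` out of the result.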

After upgrading from version 3.0 or 3.1 to 3.2, the notify pods are not running properly. How can this be fixed?

Cause of the problem: In version 3.2 the configmap of the notify service changed compared with previous versions, and the old configmap was not deleted during the upgrade.

Solution: On the First Node, manually delete the configmap of the notify service and restart the notify service.

# Delete the default-notify configmap

$ kubectl delete configmap -n onecloud default-notify

# Delete the default-notify pod (replace xxx with the actual pod name suffix)

$ kubectl delete pods -n onecloud default-notify-xxx
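After the pod has been recreated, it is worth checking that it actually reaches the Running state. The following sketch reads `kubectl get pods --no-headers` output and reports pods of one component whose STATUS column is anything other than `Running`. `not_running` is a hypothetical helper, and it relies on the default column order (NAME, READY, STATUS, ...).

```shell
# not_running COMPONENT: read `kubectl get pods --no-headers` output on stdin
# and print "NAME STATUS" for each pod named COMPONENT-<suffix> whose STATUS
# (third column) is not "Running".
not_running() {
    awk -v prefix="$1-" 'index($1, prefix) == 1 && $3 != "Running" { print $1, $3 }'
}

# Possible usage; empty output means every matching pod reports Running:
# kubectl get pods -n onecloud --no-headers | not_running default-notify
```

This only inspects the STATUS column, so a pod that is Running but not yet Ready is not reported.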